Creating Realistic Test Data with Python Faker

Testing data systems, processing applications, and data management systems requires data. There are a few ways to get test data: generate your own, or use an existing dataset in the format you need. But what if you need specific data types and many rows, and you want something you can keep in a git repo without taking up much storage?

Your choices:

- Look for a downloadable dataset from a source like Kaggle, then import it

- Write your own Python script to generate data:

  - Use pandas + numpy to create a simple dataset

  - Use pandas + Python's Faker module

Using pandas + numpy to create a fake dataset:

To keep things simple, the script writes to a CSV file. Depending on the system you are testing, you could instead load the data into a database using one of Python's many database modules.

import pandas as pd
import numpy as np
import sys
import time

# Create fake data
def get_dataset(size):
    df = pd.DataFrame()
    df['game'] = np.random.choice(['GameA', 'GameB', 'GameC', 'GameD', 'GameE'], size)
    df['score'] = np.random.randint(1, 9000, size)
    df['team'] = np.random.choice(['taper1', 'bravo7', 'M4', 'bluetree', 'tack satch', '21 jump'], size)
    df['win'] = np.random.choice(['yes', 'no'], size)
    df['prob'] = np.random.uniform(0, 1, size)
    return df

# Variables
size = 1_000_000  #! over 100 million rows laptop gets angry
FTIME = time.strftime('%b_%Y%d%H%M%S')
FNAME = "../data/fake_data_" + FTIME + ".csv"

print("\nCreating fake dataset with", size, "rows")

# Generate data with n rows.
df = get_dataset(size)
head1 = df.head()
print("\nSummary:\n", head1.to_string(index=False), "\n\nWriting file:", FNAME)

# Write file
df.to_csv(FNAME, index=False)
print("\nCreated file:", FNAME, "\n" "With", size, " rows")
sys.exit()

Running it with a million rows took just under 4 seconds.

sh-3.2$ time python3 fake_data_quick

Creating fake dataset with 1000000 rows

Summary:
 game  score      group win     prob
GameE   3662         M4 yes 0.980694
GameD   2862     taper1 yes 0.707455
GameE   4118 tack satch yes 0.859279
GameB   5696   bluetree yes 0.309197
GameE   4580     taper1  no 0.396701

Writing file: ../data/fake_data_Feb_202316124322.csv

Created file: ../data/fake_data_Feb_202316124322.csv
With 1000000 rows

real 0m3.883s
user 0m3.915s
sys 0m0.369s
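One way to approach the git repo question from the introduction is to commit only the generator script and make its output reproducible. The sketch below is not what the script above does: it swaps the global `np.random` calls for a seeded `np.random.default_rng` generator, so the same seed should always yield the same rows. The function name `get_seeded_dataset` and the `seed` parameter are hypothetical.

```python
import numpy as np
import pandas as pd


def get_seeded_dataset(size, seed=42):
    """Build the same columns as get_dataset, but from a seeded generator."""
    rng = np.random.default_rng(seed)  # same seed -> same data on every run
    df = pd.DataFrame()
    df['game'] = rng.choice(['GameA', 'GameB', 'GameC', 'GameD', 'GameE'], size)
    df['score'] = rng.integers(1, 9000, size)  # upper bound is exclusive, like randint
    df['team'] = rng.choice(['taper1', 'bravo7', 'M4', 'bluetree', 'tack satch', '21 jump'], size)
    df['win'] = rng.choice(['yes', 'no'], size)
    df['prob'] = rng.uniform(0, 1, size)
    return df


if __name__ == "__main__":
    a = get_seeded_dataset(1_000, seed=7)
    b = get_seeded_dataset(1_000, seed=7)
    # Two runs with the same seed should produce identical frames
    print(a.equals(b))
```

With this pattern only the script and the seed need to live in the repo; the CSV can be regenerated on demand.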

Next I wanted a wider set of columns with different data types. I could build them with numpy as above, or I could use Python's Faker module.

In my first attempt, I made the script able to write files in different formats, which is handy when testing a processing system, an ETL pipeline, or similar:

- CSV

- Parquet

- JSON

#! /usr/local/bin/python3

import pandas as pd
from faker import Faker
import argparse
import sys
from tqdm import tqdm

# This script generates data in json, csv or parquet format
# mblue Feb 2023

parser = argparse.ArgumentParser()
parser.add_argument('--file', '-f', nargs='*', dest="data_file", help='Base name for the output file, e.g. user_data (the extension is added from --format)')
parser.add_argument('--records', '-r', nargs='*', dest="num_records", type=str, help='Enter the amount of records like 100 = 100 rows of data')
parser.add_argument('--format', dest="format_name", type=str, help="Specify the format of the output")
parser.add_argument('--append', '-a', help='Append to an existing file instead of creating a new one', action="store_true")
args = (parser.parse_args())

# arg conditions
if args.data_file:
    FNAME = ''.join(args.data_file)
else:
    # Setting default value
    FNAME = "fake_user_data"

if args.num_records:
    REC = ''.join(args.num_records)
else:
    REC = 100

# limited error handling
# *TODO: Better handling
if args.format_name is None:
    print("Needs 'csv, json or parquet' format argument:\n"
          "Usage is: python3 fkdata_generator --file mytestdata --records 1000 --format parquet")
    sys.exit()

if args.format_name:
    FORMAT = ''.join(args.format_name)

# str() keeps this working when REC falls back to the integer default
print("\nGenerating Fake Data with", '\'' + str(REC) + '\'', 'records.... \n')

# Create an instance of Faker
fake = Faker('en-US')

#CPU_CORES = cpu_count() -1

REC = int(REC)

# Generate Fake Data
def create_df():
    df = pd.DataFrame()
    for _ in tqdm(range(int(REC)), colour="#18F6F6"):
        # Names are reused below to build the email address and gamer ID
        first_name = fake.first_name()
        last_name = fake.last_name()
        gamer_id = last_name + str(fake.random_int())  #* Concatenate last name and a random integer
        df = pd.concat([df, pd.DataFrame({
            'Email_Id': f"{first_name}.{last_name}@{fake.domain_name()}",
            'Name': first_name,
            'Gamer_Id': gamer_id,
            'Device': fake.ios_platform_token(),
            'Phone_Number': fake.phone_number(),
            'Address': fake.address(),
            'City': fake.city(),
            'Year': fake.year(),
            'Time': fake.time(),
            'Link': fake.url(),
            'Purchase_Amount': fake.random_int(min=5, max=1000)
        }, index=[0])], ignore_index=True)
    return df

df = create_df()

def head_it():
    h1 = df.head(3)
    print("\nSample Data:\n", h1.to_string(index=False), "\n")

# Global messages
def Message1():
    MESSAGE = f"\nCreated file: {FNAME}\nWith {REC} records of data\n"
    print(MESSAGE)

def Message2():
    MESSAGE = f"\nAppended to file: {FNAME}\nWith {REC} more records of data\n"
    print(MESSAGE)

# Write to CSV
if args.format_name == "csv":
    if args.append:
        head_it()
        FNAME = FNAME + ".csv"
        # Append rows only; assumes the existing file already has the header row
        df.to_csv(FNAME, mode='a', index=False, header=False)
        Message2()
        sys.exit()
    else:
        head_it()
        FNAME = FNAME + ".csv"
        df.to_csv(FNAME, index=False)
        Message1()
        sys.exit()

# Write to Parquet
if args.format_name == "parquet":
    if args.append:
        head_it()
        FNAME = FNAME + ".parquet"
        df.to_parquet(FNAME, engine='fastparquet', append=True)
        Message2()
        sys.exit()
    else:
        FNAME = FNAME + ".parquet"
        df.to_parquet(FNAME)
        head_it()
        Message1()
        sys.exit()

# Write to JSON
if args.format_name == "json":
    FNAME = FNAME + ".json"
    df.to_json(FNAME, orient='records')
    head_it()
    Message1()
    sys.exit()
else:
    print("Format not supported must be either 'csv, json or parquet' format.")
    sys.exit()

Running this with just 10,000 rows took almost 20 seconds.

sh-3.2$ time python3 fkdata_generator --file ../data/fkuser_data --records 10000 --format parquet

Generating Fake Data with '10000' records....

100%|█████████████████████████████████████████████████████████████████████████████| 10000/10000 [00:18<00:00, 551.32it/s]

Sample Data:

Email_Id Name Gamer_Id Device Phone_Number Address City Year Time Link Purchase_Amount

Erin.Brown@montgomery-nichols.com Erin Brown6307 iPhone; CPU iPhone OS 9_3_5 like Mac OS X (009)211-6735x508 48402 Kelly Port Apt. 431\nNorth Brian, PR 81662 Lucasland 2012 06:12:59 https://www.perez.com/ 544

Dennis.King@avila-odom.com Dennis King3027 iPhone; CPU iPhone OS 14_2_1 like Mac OS X (852)065-5680x15345 92220 Denise Ways Apt. 843\nNorth Sierrashire, WV 39014 East Timothyshire 1976 20:42:58 https://ortega.com/ 97

Tammy.Andrews@garrison.com Tammy Andrews8289 iPhone; CPU iPhone OS 6_1_6 like Mac OS X 001-319-013-0740 6603 Justin Prairie Apt. 652\nMorrisbury, ID 31143 Nicholeville 1987 07:41:59 http://www.anderson.org/ 312

Created file: ../data/fkuser_data.parquet

With 10000 records of data

real 0m19.056s

I added an append option so I can always add to a file later.

time python3 fkdata_generator --file ../data/fkuser_data --records 20000 --format parquet --append

Generating Fake Data with '20000' records....

100%|█████████████████████████████████████████████████████████████████████████████| 20000/20000 [00:55<00:00, 361.19it/s]

Sample Data:

Email_Id Name Gamer_Id Device Phone_Number Address City Year Time Link Purchase_Amount

Jonathan.Ryan@hopkins.info Jonathan Ryan5852 iPad; CPU iPad OS 3_1_3 like Mac OS X (466)654-6869 70790 Yates Ways\nSchmidtview, CA 45881 South Stevenburgh 1979 02:05:06 https://morgan-barber.com/ 317

Calvin.Bennett@harmon.biz Calvin Bennett9929 iPhone; CPU iPhone OS 7_1_2 like Mac OS X 655.995.8999 720 Andrews Roads\nNew Karafurt, MO 41521 Port Daniel 2010 21:29:23 https://www.myers.info/ 433

Christina.Smith@burke-keller.com Christina Smith2436 iPad; CPU iPad OS 9_3_5 like Mac OS X +1-095-350-1935 14657 Hardin Hills\nGutierrezville, CO 22242 Port Nicoleburgh 2005 19:49:27 http://www.figueroa.biz/ 350

Appended to file: ../data/fkuser_data.parquet

With 20000 more records of data

real 0m56.503s

user 0m55.654s

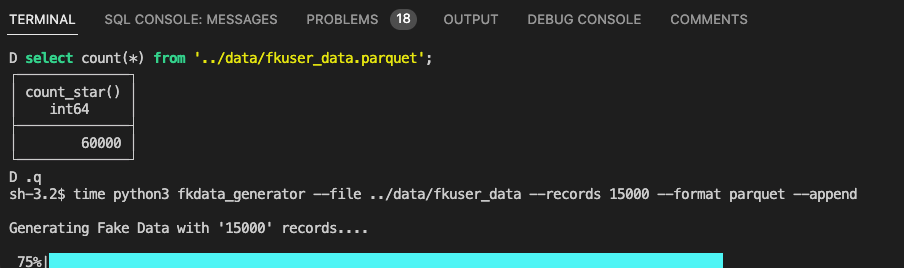

sys 0m0.876sVerify it added the records.

sh-3.2$ ./duckdb

D select count(*) from '../data/fkuser_data.parquet';

┌──────────────┐

│ count_star() │

│ int64 │

├──────────────┤

│ 30000 │

└──────────────┘

In these runs, doubling the number of rows roughly tripled the run time (about 19 seconds for 10,000 rows versus about 57 seconds for 20,000). I will add multiprocessing in time, but for now it does what I need it to do.
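A likely contributor to this growth is the `pd.concat` call inside the loop: each call builds a new DataFrame containing every row collected so far, so the total work grows faster than the row count. A minimal sketch of an alternative, untimed here, is to collect each row as a dict and build the DataFrame once at the end. The names `build_frame` and `sample_row` are hypothetical, and `sample_row` uses the standard library's `random` module as a stand-in so the sketch runs without Faker; in the real script it would return the Faker fields from `create_df`.

```python
import random

import pandas as pd


def sample_row():
    """Stand-in row factory; swap the values for Faker calls in the real script."""
    last_name = random.choice(['Brown', 'King', 'Andrews'])
    return {
        'Name': random.choice(['Erin', 'Dennis', 'Tammy']),
        'Gamer_Id': last_name + str(random.randint(0, 9999)),
        'Purchase_Amount': random.randint(5, 1000),
    }


def build_frame(num_records, make_row=sample_row):
    """Collect rows in a plain list, then construct the DataFrame a single time."""
    rows = [make_row() for _ in range(num_records)]
    return pd.DataFrame(rows)


if __name__ == "__main__":
    df = build_frame(10_000)
    print(len(df), list(df.columns))
```

Appending to a Python list does not copy the rows already collected, which is why this shape tends to scale better than growing a DataFrame row by row.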

You can find this script on GitHub: fkdata_generator
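The script marks its argument handling as a TODO. As one possible direction, and only a sketch, argparse itself can do much of the checking: `type=int` converts the record count, `choices` restricts the format, and `required=True` replaces the manual `None` check. The `build_parser` function name is hypothetical; the flag names and defaults mirror the script.

```python
import argparse


def build_parser():
    """Return a parser that validates the generator's arguments up front."""
    parser = argparse.ArgumentParser(
        description="Generate fake data in csv, json or parquet format")
    parser.add_argument('--file', '-f', dest='data_file', default='fake_user_data',
                        help='Base name for the output file')
    parser.add_argument('--records', '-r', dest='num_records', type=int, default=100,
                        help='Number of rows to generate')
    parser.add_argument('--format', dest='format_name', required=True,
                        choices=['csv', 'json', 'parquet'],
                        help='Output format')
    parser.add_argument('--append', '-a', action='store_true',
                        help='Append to an existing file')
    return parser


if __name__ == "__main__":
    # Parse an explicit list here so the sketch runs without command-line input
    args = build_parser().parse_args(['--records', '1000', '--format', 'parquet'])
    print(args.data_file, args.num_records, args.format_name, args.append)
```

With this setup an unsupported format or a non-numeric record count makes argparse print a usage error and exit before any data is generated.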

Our first approach, building a DataFrame from numpy-generated data and writing it to a file, ran much faster, but it was limited in the range of data it could produce. With Faker, we can create richer data in a single function, which was useful for testing DuckDB against a wide table with millions of rows. Writing to a file in JSON, CSV, or Parquet format can be useful for certain types of testing. A better option might be to connect directly to a database, which would not be difficult. More to come on this soon.
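As a small illustration of the database option, and assuming SQLite is an acceptable stand-in for whatever system you are testing, a DataFrame can be written straight to a table with `DataFrame.to_sql` and the standard library's `sqlite3` module. The `load_into_sqlite` helper, the `fake_scores` table name, and the in-memory database are all hypothetical choices for this sketch.

```python
import sqlite3

import numpy as np
import pandas as pd


def load_into_sqlite(df, conn, table='fake_scores'):
    """Append the DataFrame to a SQLite table and return the table's row count."""
    df.to_sql(table, conn, if_exists='append', index=False)
    return conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]


if __name__ == "__main__":
    size = 1_000
    df = pd.DataFrame({
        'game': np.random.choice(['GameA', 'GameB', 'GameC'], size),
        'score': np.random.randint(1, 9000, size),
    })
    conn = sqlite3.connect(':memory:')  # pass a file path instead to persist the data
    print(load_into_sqlite(df, conn))
    conn.close()
```

Because `if_exists='append'` is used, calling the helper again adds rows to the same table, much like the `--append` flag does for files.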